From Image Quality to Image Awareness: The Next Step-Change in Displays

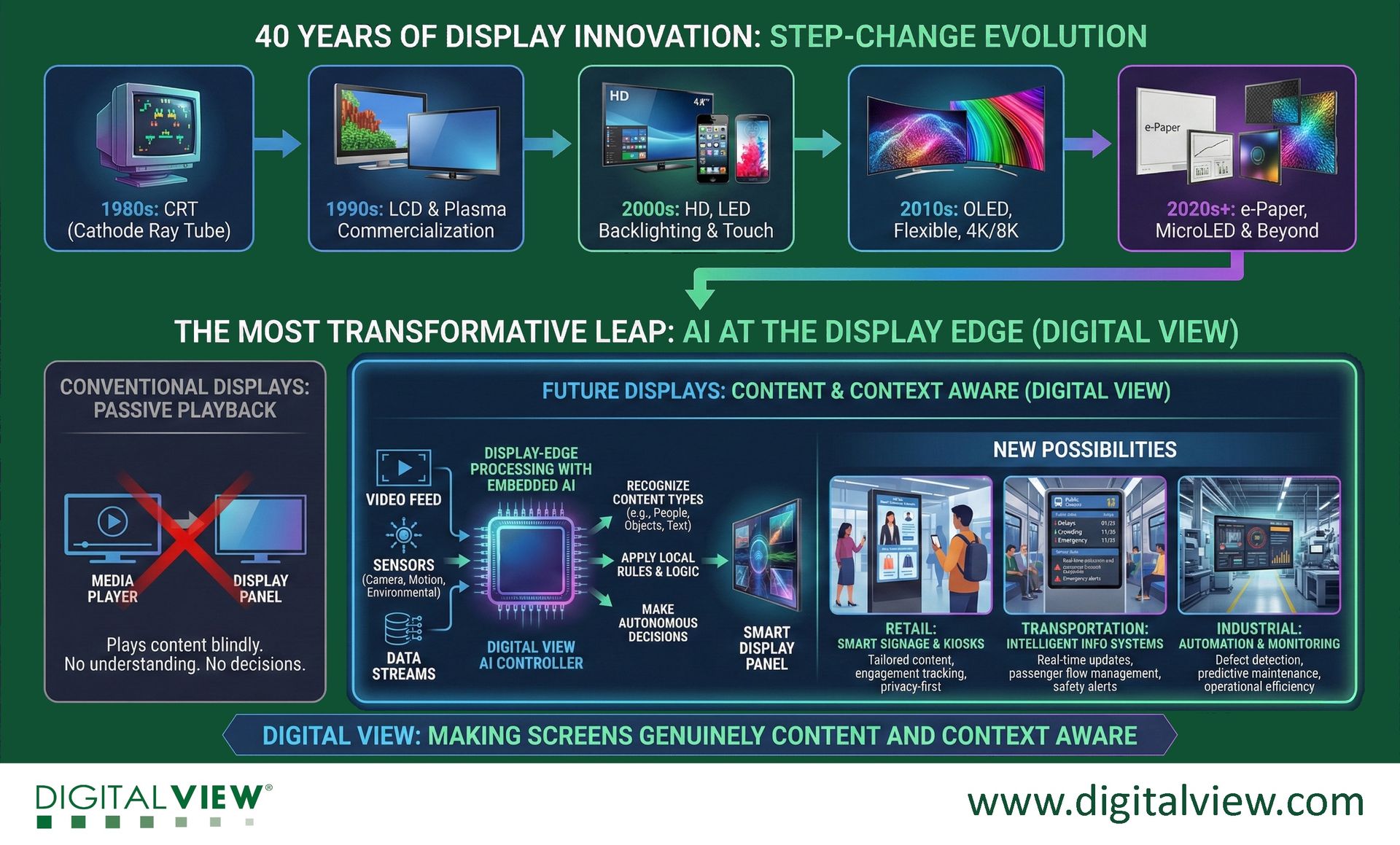

My first involvement with LCD technology was around forty years ago, at a time when CRT was very much the dominant display technology. Since then, display innovation has followed a remarkably consistent path, with each major advance focused on improving image quality.

CRTs eventually gave way to LCD and plasma displays; resolution increased from standard definition to high definition and beyond; and color accuracy steadily improved. Panels became thinner, lighter, brighter, and more power-efficient. These advances mattered and enabled entirely new applications. Through all of this progress, however, the role of the display itself remained fundamentally unchanged: to render pixels as accurately as possible. The display was an endpoint in the system.

That assumption is now changing.

What is emerging is not simply another refinement in image quality, though that development continues, but a shift in what a display is and how it participates in a system. Displays are on the brink of becoming image aware, capable of interpreting what they are showing and responding to context locally at the display edge. This represents much more than an incremental improvement.

An image-aware display does more than present content. It can recognize visual elements such as text, objects, or people, incorporate inputs from sensors and external data streams, apply local rules and logic, and act autonomously within defined constraints. At that point, the display is no longer passive playback hardware. It becomes an active component of the system itself.
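To make the paragraph above more concrete, here is a minimal sketch of that decision loop. It assumes a hypothetical `Detection` type produced by an on-device vision model, a hypothetical dictionary of sensor readings (`ambient_lux`, `temperature_c`), and an illustrative set of permitted actions standing in for the "defined constraints". None of these names come from any Digital View product; the sketch only shows how recognition, sensor input, local rules, and bounded autonomy can fit together.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """A visual element recognized in the displayed frame (hypothetical type)."""
    label: str         # e.g. "text", "person", "warning_icon"
    confidence: float  # 0.0 to 1.0


# Defined constraints: the only actions the display may take on its own.
# This set is an illustrative assumption, not a real product configuration.
ALLOWED_ACTIONS = {"raise_brightness", "show_alert_overlay", "notify_host"}


def decide(detections, sensors, min_confidence=0.6):
    """Combine on-screen content with sensor inputs and apply local rules.

    Returns the list of actions to take, filtered to ALLOWED_ACTIONS.
    """
    # Keep only the elements the vision stage is reasonably sure about.
    labels = {d.label for d in detections if d.confidence >= min_confidence}
    actions = []

    # Rule 1: strong ambient light, so boost brightness for readability.
    if sensors.get("ambient_lux", 0) > 10_000:
        actions.append("raise_brightness")

    # Rule 2: a warning is on screen while a person is in view.
    if "warning_icon" in labels and "person" in labels:
        actions.append("show_alert_overlay")

    # Rule 3: an out-of-range reading from an external data stream.
    if sensors.get("temperature_c", 20) > 70:
        actions.append("notify_host")
        actions.append("shutdown_panel")  # not permitted locally; dropped below

    # Act autonomously only within the defined constraints.
    return [a for a in actions if a in ALLOWED_ACTIONS]


if __name__ == "__main__":
    frame = [Detection("warning_icon", 0.9), Detection("person", 0.8)]
    print(decide(frame, {"ambient_lux": 12_000, "temperature_c": 75}))
```

Here the rules are plain conditionals, so the behavior is deterministic and easy to audit. The recognition step that fills in `detections` could be a neural network, a classical vision technique, or a hybrid of the two, and the rule layer would not need to change.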

Technology & market shifts

This shift is happening now because two developments are converging. Embedded processing has reached a level of maturity where meaningful inference can be performed reliably at the edge, without constant reliance on cloud infrastructure. At the same time, many real-world display deployments now require more than presentation. Industrial environments, transportation systems, and public infrastructure increasingly depend on displays that can respond to changing conditions in real time. Latency, privacy, determinism, and resilience all favor local decision-making, and image awareness at the display edge directly addresses these requirements.

This evolution is often described as “AI in displays,” but that framing misses the point. AI is an implementation detail; image awareness is the capability. The value lies not in the model itself, but in the outcome: understanding content and context, acting locally and predictably, and integrating the display into broader system logic. Whether that awareness is achieved through neural networks, classical vision techniques, or hybrid approaches is secondary to the fact that the display can participate rather than simply present.

The implications of image-aware displays are practical and immediate. In industrial environments, displays can adapt to operational states, highlight anomalies, and support predictive maintenance workflows. In transportation systems, they can respond dynamically to real-time conditions, safety events, or passenger flow. In retail and public spaces, displays can tailor content while preserving privacy by keeping analysis local. In each case, the display moves closer to the decision boundary of the system instead of remaining a passive output device at the end of the chain.

A genuine step-change

Every previous era of display innovation focused on improving how well images were shown. This shift changes what displays do. That is why it represents a genuine step-change rather than another incremental advance.

At Digital View, this perspective shapes how we think about display controllers and embedded platforms, not as isolated components, but as enablers of image-aware systems at the display edge. Displays are no longer just screens. They are becoming participants.

Next steps

- See the Digital View AI boards, ALC-4096-AIH and AI-100 (stay tuned for more)

- Visit the Digital View AI at the Display Edge website

- Contact us for products, developments, or discussion